Artificial intelligence is in a bubble. Probably.

The problem is, we don’t know where the bubble is or when it will pop.

Today, I want to cover AI, where I see opportunities and risks in it, and NVIDIA’s earnings. This is, after all, a site about asymmetric investing, and AI seems to fit that mold. But right now the AI frenzy means a lot of growth is already priced in, so the upside isn’t as asymmetric as I want as an investor.

This will give you a peek into how I think about AI and will be a building block for a long-term thesis in the industry.

The 4 components of AI

To give some background, I think about AI as four major, distinct components.

The Model: An artificial intelligence model is like the brains behind AI. Based on your inputs, it’s what figures out what word comes next or what pixels make up an image. Models can be big (over 1 TB) or small (7 GB for Stable Diffusion on an iPhone) and could run anywhere there’s enough computing power. Models can take months to train and can also be customized for specific use cases.

The Inference: Each chat message or image prompt triggers an inference. It’s a call to the model to create something.

The Hardware: Models and inferences have to run on computer hardware or chips. Today, most of that hardware is in the cloud and made by NVIDIA. But AI models may soon live on a chip in your phone, which would run the inference on-device.

The Product: What you actually interface with is the product. ChatGPT is the product and GPT-4 is the model; each prompt is an inference that runs in the cloud on NVIDIA chips in a Microsoft data center.

AI now and in the future

Before I get to NVIDIA’s results, I want to cover where I think we are in the AI lifecycle and where we are going. This opinion is subject to change, but it shapes how I’m thinking about AI today.

AI in May 2023

Most AI models we use today are proprietary.

Most AI inference is done in the cloud.

The hardware is almost entirely NVIDIA chips, although which data centers house those chips will change.

Outside of ChatGPT, there aren’t a lot of sure-fire product winners in AI.

A LOT of companies are throwing stuff at the wall.

The AI crystal ball

My AI thesis is built on the industry shifting to the following structure:

Most AI models will be open-source in the future.

Open-source AI projects have moved much faster than proprietary technology in the last 6 months.

Any company (Meta, for example) could simply give away its model to “scorch the earth” for competitors. It may give the model away because its business model (ads) is orthogonal to the AI model or because it’s losing market share.

Most inference will be done on-device eventually.

Apple has already written about putting Stable Diffusion on iPhones, and as models get “good enough” for basic tasks, they could be shrunk down to fit on a chip.

An on-device model could then be called by any app via API, eliminating the need for apps to build their own model or pay a cloud provider for inferences.

NVIDIA dominates AI hardware today, but EVERY company is working on custom chips and systems. I think eventually, the hardware market share will spread out.

Apple will likely lean into its custom silicon for AI.

Microsoft and Facebook both have AI chip projects and a financial incentive to build custom chips for their AI data centers.

AI chip startups are raising money hand over fist.

Product: We still don’t know much about AI use cases or AI product moats.

Will we be using ChatGPT a year from now?

Will search just evolve to include AI stuff?

Will on-device AI change product development?

What haven’t we thought of? Probably a lot!


AI as an investment

Given everything I have written so far, is there any part of the AI value chain that doesn’t look like it will eventually be a commodity? Are there any real moats? I don’t see any…yet.

AI models are already being commoditized. Inference would become a commodity if it moves on-device. AI products are developed so fast (often in a matter of days) that it’s hard to see a moat outside of existing moats, like search and Windows 365, with AI bolted on.

Hardware is the hardest for me to assess. I could see NVIDIA taking the lion’s share of the market, but I could also see every big tech company asking why it’s paying NVIDIA tens of billions of dollars a year for something it could build in-house (I know I’m oversimplifying how easy this would be, but we’re talking A LOT of money). I also don’t know what we don’t know about future AI hardware and startups.

As an investor, if I’m paying a high price for AI growth and it turns out the piece of the value chain I’m betting on gets commoditized, I’m screwed. I want AI to be a potential tailwind that provides surprising revenue growth, margin leverage, and/or stock appreciation. This is how I framed AI in the Dropbox spotlight.

As an asymmetric investor, I want stocks with FUTURE upside. I am not going to PAY a premium for a company whose massive growth is already priced in.

On to NVIDIA

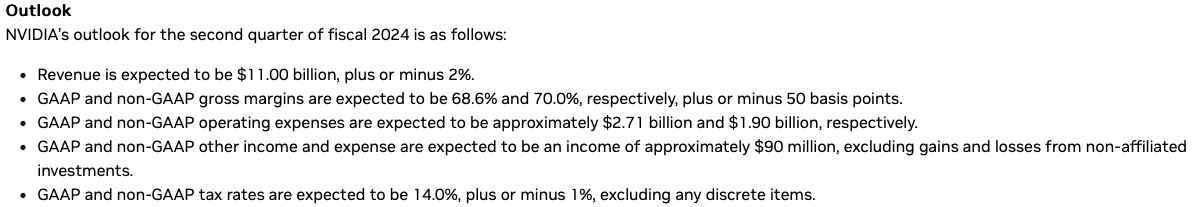

To be honest, the Q1 fiscal 2024 results weren’t that great. Revenue was down, although earnings were up. The headline numbers are here and you can see my friend Jose Najarro’s earnings breakdown here.

The ONLY thing that mattered to investors was guidance. And it was one of the most incredible segments of an earnings report I’ve ever seen. Revenue is expected to go from $7.2 billion to $11.0 billion in ONE QUARTER!
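To put that guidance in perspective, here is a back-of-the-envelope calculation of the implied quarter-over-quarter jump. It uses the rounded $7.2 billion and $11.0 billion figures above, and guidance is itself only an estimate, so treat this as a rough sketch.

```python
# Rounded figures in billions of USD: Q1 fiscal 2024 revenue and Q2 guidance.
q1_revenue = 7.2
q2_guidance = 11.0

# Sequential (quarter-over-quarter) growth implied by the guidance.
growth = (q2_guidance - q1_revenue) / q1_revenue

print(f"Implied sequential growth: {growth:.1%}")
```

That works out to roughly 53% growth in a single quarter.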

Where is that growth coming from? You guessed it. Cloud AI.

“When we talk about our sequential growth that were expected between Q1 and Q2, our generative AI large language models are driving this surge in demand, and it's broad-based across both our consumer Internet companies, our CSPs, our enterprises and our AI start-ups. It is also interest in both of our architectures, both of our Hopper latest architecture as well as our Ampere architecture. This is not surprising as we generally often sell both of our architectures at the same time. This is also a key area where deep recommendators are driving growth. And we also expect to see growth both in our computing as well as in our networking business.”

The question isn’t IF NVIDIA will have a good year; the guidance figures show 2023 will be incredible. The question is whether NVIDIA is still the center of the AI universe in 2030 or not.

It may be, but even at a $44 billion annual revenue run rate, the $960 billion market cap as I’m writing works out to a price-to-sales ratio of about 22.
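The price-to-sales figure is simple arithmetic. This sketch assumes the $44 billion run rate is the $11.0 billion Q2 guidance annualized (multiplied by four quarters) and uses the roughly $960 billion market cap at the time of writing.

```python
# Assumption: the run rate annualizes the Q2 revenue guidance over 4 quarters.
q2_guidance = 11.0   # billions of USD, Q2 fiscal 2024 revenue guidance
market_cap = 960.0   # billions of USD, approximate market cap at time of writing

run_rate = q2_guidance * 4              # annualized revenue run rate
price_to_sales = market_cap / run_rate  # market cap divided by annual sales

print(f"Run rate: ${run_rate:.0f}B")
print(f"Price-to-sales: {price_to_sales:.1f}")
```

A ratio near 22 means paying roughly $22 for every dollar of annualized revenue, which is why so much growth already looks priced in.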

Like I said, I want future AI upside for the stock; I don’t want to pay a premium for growth. And as well as NVIDIA is performing now, I see more risk to its growth than value in the stock. That’s why NVIDIA won’t be in the Asymmetric Investing Universe anytime soon.

But had this newsletter started a decade earlier, NVIDIA would have been a great pick! And congratulations to all shareholders. What an amazing outlook!

Disclaimer: Asymmetric Investing provides analysis and research but DOES NOT provide individual financial advice. Travis Hoium may have a position in some of the stocks mentioned. All content is for informational purposes only. Asymmetric Investing is not a registered investment, legal, or tax advisor or a broker/dealer. Trading any asset involves risk and could result in significant capital losses. Please, do your own research before acquiring stocks.